In computer science academia, C along with UNIX, and PDP11s, is as unfashionable as can be: the equivalent of other 1970’s relics, like polyester leisure suits and mullets.

In 2018 ACM Queue published an essay by David Chisnall called: C Is Not a Low-level Language: Your computer is not a fast PDP-11 that expresses many common misconceptions about C and also takes the time to make a bunch of incorrect claims about computer architecture. The same authors 2015 ASPLOS paper Beyond the PDP-11: Architectural Support for a Memory-Safe C Abstract Machine has much more interesting information about how C is used in practice and about an alternative computer architecure, but I want to focus on the first, because I think the general tendency in academic Computer Science to dismiss C as an embarassing fashion faux-pas has important consequences.

Let’s go through some of Chisnall’s article, starting with this claim:

“Most of the complexity involved [in hardware caches] comes from supporting a language [C] in which data is expected to be both shared and mutable as a matter of course.”

Although the recent C11 standard tried to graft on some ideas about concurrency (not a good idea or well done either), historically C doesn’t have have any notion of concurrency or data sharing1. The first wide use of “threading” in C applications involved UNIX fork/exec which doesn’t share any memory at all. The language designers made a conscious, and successful, choice to leave such things outside the scope of the language.

The poor judgment of the C11 authors didn’t really change that.

“Creating a new thread [in C ] is a library operation known to be expensive,

C has threading libraries, but none of them is required (otherwise you couldn’t develop operating systems in C or support multiple thread models). C thread creation is also not known to be expensive. Determining factors are implementations, processor architectures, and, for user threads, operating system interfaces.

so processors wishing to keep their execution units busy running C code rely on ILP (instruction-level parallelism).”

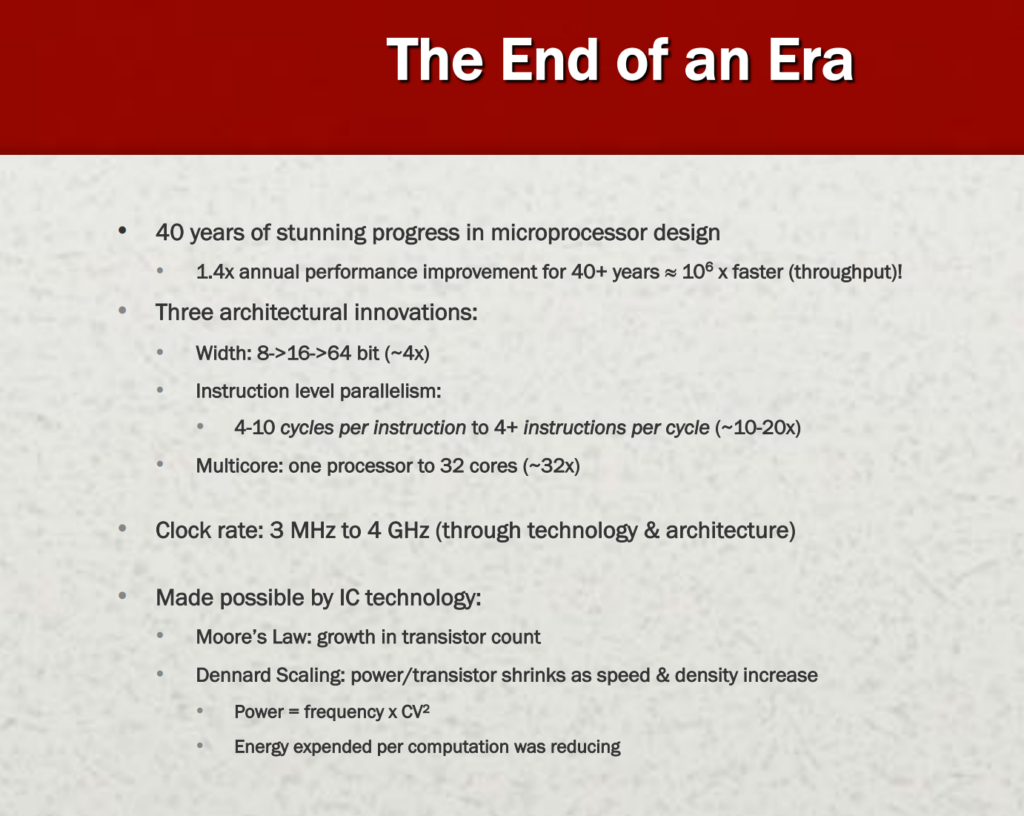

Instruction level parallelism predates C by 10 years and is not specific to any programming language (see below).

In the wake of the recent Meltdown and Spectre vulnerabilities, it’s worth spending some time looking at root causes. Both of these vulnerabilities involved processors speculatively executing instructions past some kind of access check and allowing the attacker to observe the results via a side channel. The features that led to these vulnerabilities, along with several others, were added to let C programmers continue to believe they were programming in a low-level language, when this hasn’t been the case for decades.

The first production computer with ILP and speculation was the CDC 6600, brought to market in 1964 and there was speculative branch prediction in the IBM 360/91 (1967). C was developed in the 1970s, well after and only became popular in the 1980s or later.

The root cause of the Spectre and Meltdown vulnerabilities was that processor architects were trying to build not just fast processors, but fast processors that expose the same abstract machine as a PDP-11. This is essential because it allows C programmers to continue in the belief that their language is close to the underlying hardware. In contrast, GPUs achieve very high performance without any of this logic, at the expense of requiring explicitly parallel programs.

Let’s go through these:

- GPUs are primarily programmed in C dialects, so I’m not sure what is going on there. Using C was the easy choice for GPU makers because of C’s deliberately incomplete nature (see below).

- Why would anyone think that what C programmers believe about C would matter to computer architects? It does not.

- The root cause of the Spectre and Meltdown failures is that it is really hard to do out-of-order pipelines with speculative execution and buffered memory access (there is way too much state moving too fast) but the performance advantage makes it hard to pass up.

- As for PDP-11s, this is a common error. C was ported to IBM/370, Honeywell 6000, Inter data 8/32, Sel86, Data General Nova, Vax and other processors in the mid 1970s – back in the days when there were multiple very different machine architectures. By the mid 1980s, when C was becoming a popular language, and the ANSI standard was developed the successor VAX architecture supported SMP and C was already highly popular on microprocessors. Much of what changed in C in that era was to make it portable to that wild profusion of architectures. And really widespread use of C began with Motorola 68K powered Suns (1 stunning megahertz or even better) and similar.

It’s worth noting that Chisnall knows this last complaint is wrong, as he writes later:

The C model, in contrast, was intended to allow implementation on a variety of targets, including segmented architectures (where a pointer might be a segment ID and an offset) and even garbage-collected virtual machines.

In any case, although dismissing the PDP11 is in vogue, the PDP-11 always had an MMU, incorporated caches by the mid 1970s, and had parallel processing (with DMA capable devices and co-processors). Although the article subhead is “Your computer is not a fast PDP-11”, really it is pretty much – just vastly larger (in terms of transistors ), and faster. Processor architectures today incorporate a lot of innovation, but use the same basic model programmers encountered 50 years ago and X8–64 and ARM are both much closer to the PDP-11, for good or ill, than to the 36 bit Honeywell 6000, the Interdata 8/32 , or the Intel 8086 and I486 for that matter.

Let Professor Hennessy explain:

But Chisnall is not done with C.

We have a number of examples of designs that have not focused on traditional C code to provide some inspiration. For example, highly multithreaded chips, such as Sun/Oracle’s UltraSPARC Tx series, don’t require as much cache to keep their execution units full. Research processors2 have extended this concept to very large numbers of hardware-scheduled threads. The key idea behind these designs is that with enough high-level parallelism, you can suspend the threads that are waiting for data from memory and fill your execution units with instructions from others. The problem with such designs is that C programs tend to have few busy threads.

None of these are new or untested ideas. The Cray MTA architecture was a really interesting design that was even built and commercialized and it was designed to run C and Fortran. The problem with such designs is that highly threaded algorithms are difficult and sometimes impossible (see Amdahl’s Law) in general, although not in special circumstances (e.g. GPUs where C is used!).

For example, in C, processing a large amount of data means writing a loop that processes each element sequentially

In the Von Neumann style computer architecture (every single existing commercial processor), data is stored in memory and must be brought through the “Von Neumann bottleneck” into the processor. Caches help, and for certain types of processing, data can be moved and modified in larger chunks (vectors) which can be modified in parallel (SIMD processing is another 1960s innovation as explained in most intro computer architecture courses). When the processor can operate on those longer vectors, it still relies on loops with the same characteristics as loops over bytes for large amounts of data. Dennis Ritchie thought that C was too limited in its treatment of arrays, but being able to e.g. apply a map to an array in the programming language just hides the loop. Writing  doesn’t magically transfer the whole array into the CPU vector unit which can, at best, multiply only a few elements at a time. For big arrays, it is still necessary to loop through the data, and for small arrays, there is not much to win ( here are some other ideas). Chisnall knows this too, because he’s unhappy at how hard it is to compile vector loops in C.

doesn’t magically transfer the whole array into the CPU vector unit which can, at best, multiply only a few elements at a time. For big arrays, it is still necessary to loop through the data, and for small arrays, there is not much to win ( here are some other ideas). Chisnall knows this too, because he’s unhappy at how hard it is to compile vector loops in C.

To run this optimally on a modern CPU, the compiler must first determine that the loop iterations are independent. The C

restrictkeyword can help here. It guarantees that writes through one pointer do not interfere with reads via another (or if they do, that the programmer is happy for the program to give unexpected results). This information is far more limited than in a language such as Fortran, which is a big part of the reason that C has failed to displace Fortran in high-performance computing.

Intel’s comments on the problems of vectorizing C and FORTRAN say the opposite. Vectorization is a hard problem – C’s restrict keyword is a good clue to the compiler, and possibly a vector data type would help (as the author argues) but FORTRAN doesn’t have better information for the compiler. What FORTRAN has (a) no pointers which makes things simpler and (b) a whole lot of special purpose compiler directives and expert programmers who know how to exploit data parallelism. FYI: C was never intended to displace Fortran in HPC.

Unfortunately, simple translation providing fast code is not true for C. In spite of the heroic efforts that processor architects invest in trying to design chips that can run C code fast, the levels of performance expected by C programmers are achieved only as a result of incredibly complex compiler transforms. The Clang compiler, including the relevant parts of LLVM, is around 2 million lines of code. Even just counting the analysis and transform passes required to make C run quickly adds up to almost 200,000 lines (excluding comments and blank lines).

Much of Clang’s complexity comes from its role compiling C++ which is a gigantic language that incorporates C as a sublanguage. Additionally, LLVM is designed as a general platform for a number of languages and LLVM’s complexity is far from C specific. What would be very interesting to know is how much of Clang’s C performance comes from anything past the 40 year old basic optimization methods like register coloring, peephole optimization, vectorizing and maybe unrolling loops. But here we turn to a real issues:

In C, a read from an uninitialized variable is an unspecified value and is allowed to be any value each time it is read. This is important, because it allows behavior such as lazy recycling of pages: for example, on FreeBSD the

mallocimplementation informs the operating system that pages are currently unused, and the operating system uses the first write to a page as the hint that this is no longer true. A read to newlymalloced memory may initially read the old value; then the operating system may reuse the underlying physical page; and then on the next write to a different location in the page replace it with a newly zeroed page. The second read from the same location will then give a zero value.

This is an interesting point, because it reveals a common problem in current criticisms of C – and of the current efforts of the C Standards and C Compilers: a failure to understand the open border C design or maybe just dislike for it. C was intended as a systems and particularly operating systems language. Unlike Java and most other programming languages, a C program interacts directly with the hardware (and operating system) and other interfaces. Stephen Kell has a paper on precisely this point. That’s why C has no foreign function interface (FFI) but can directly call out to assembler or even support in-line assembler (and C doesn’t have an unsafe mode, because it is an unsafe mode). Although the C standard document goes on about the “abstract machine”, C gets a lot of its utility from being able use operations that are semantically defined by hardware or other external interfaces. C benefits from incomplete semantics (which is why the ongoing mess about undefined behavior is so damaging to C programmers). In this case, the FreeBSD operating system is playing games with the page allocator so allocated pages may not act like abstract store until a write operation2. This should not matter to a C program, because, especially in OS programming, this kind thing is not unusual. Weird interactions with memory are one reason why C has the concept of intederminate values. C code can even read device memory that changes its value on each read (think of a hardware fifo from a Analog-to-Digital sensor, for example). In these cases, the C compiler is supposed to let C operate as a high level assembly language and stay out of it.

And then Chisnall makes a really good point.

If an unspecified value for flow control is used (for example, using it as the condition in an

ifstatement), then the result is undefined behavior: anything is allowed to happen. Consider the loop-unswitching optimization, this time in the case where the loop ends up being executed zero times. In the original version, the entire body of the loop is dead code. In the unswitched version, there is now a branch on the variable, which may be uninitialized. Some dead code has now been transformed into undefined behavior. This is just one of many optimizations that a close investigation of the C semantics shows to be unsound.

The important observation here is that under the current interpretation of the C standard, a number of compiler optimizations are unsound and have been for some time. This has been tolerated because C is so very out of fashion and there is little appreciation of the basic design concepts of the C language (except from people who use it.) What is going on here is that standards contributors and compiler developers who start with the assumption that C is an obsolete and semantically confused language feel no  obligation to play fair with the programmers who they believe are making a mistake even wanting to write code in the language. This is why Chris Lattner of LLVM ends his gloomy (and insightful) analysis of the state of C compilation with a suggestion that programmers should switch to another language (like his own). And that is unfortunate because C is far from a perfect language and slow changes in computer architecture are frustrating, but if you don’t know what works and what doesn’t work, you won’t be able to fix anything. And, finally, some things from the 1970s were pretty good.

obligation to play fair with the programmers who they believe are making a mistake even wanting to write code in the language. This is why Chris Lattner of LLVM ends his gloomy (and insightful) analysis of the state of C compilation with a suggestion that programmers should switch to another language (like his own). And that is unfortunate because C is far from a perfect language and slow changes in computer architecture are frustrating, but if you don’t know what works and what doesn’t work, you won’t be able to fix anything. And, finally, some things from the 1970s were pretty good.

NOTE 1: The unnecessary complexity of shared data in caches is unpleasant but that’s not C’s fault.

NOTE 2: The much loathed Bourne Shell, in addition to using the pre-processor to pretend it was written in ALGOL for unknown reasons, also implemented a user mode virtual memory with C/UNIX signals. It allocated virtual memory addresses without bothering to call the OS for more memory, and then caught write traps and tried to fix things.